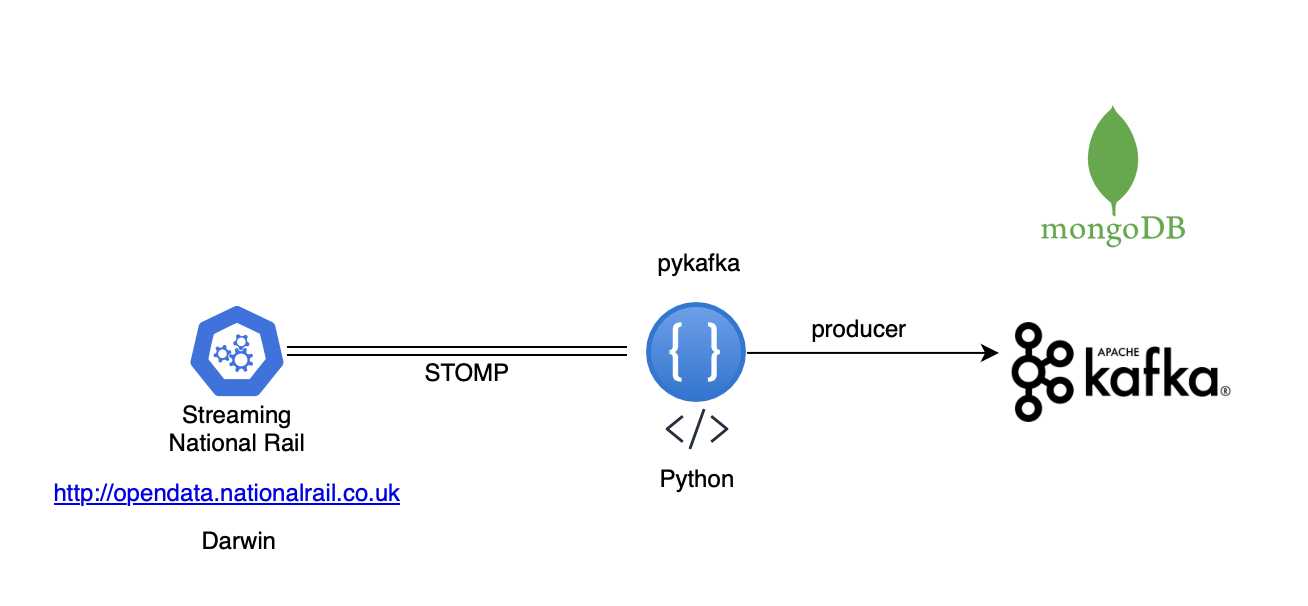

Using the STOMP protocol to stream data with Kafka and MongoDB

In this research, I aimed to build a streamlined architecture that can handle a large volume of data using NoSQL and Kafka, an area in which I don't have much real-world experience. A streaming pipeline differs a little from a batch pipeline, but the underlying idea is almost the same (at least for me).

Technical solution

Design of the architecture:

Requirements

To use this repo, you will need to do the following:

- Clone this repository to your local machine.

- Install the libraries: pykafka, pymongo, stomp-py, requests, xmltodict.

- Register with the dataset provider: https://opendata.nationalrail.co.uk.

- Install Docker Compose.

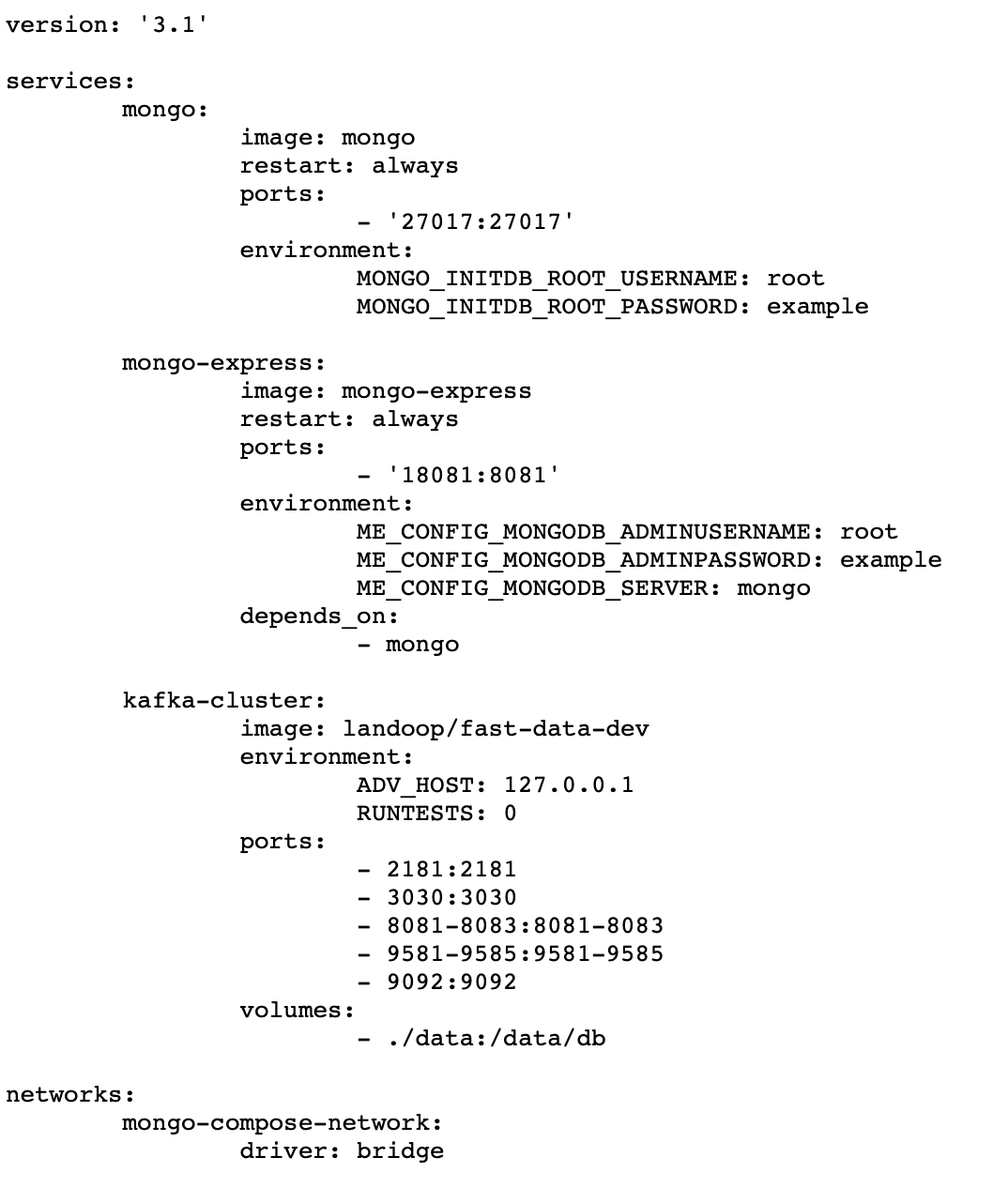

Docker Compose

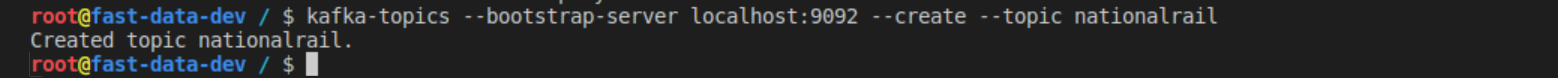

Kafka

The first step after bringing up the Docker containers is to create the Kafka topics.

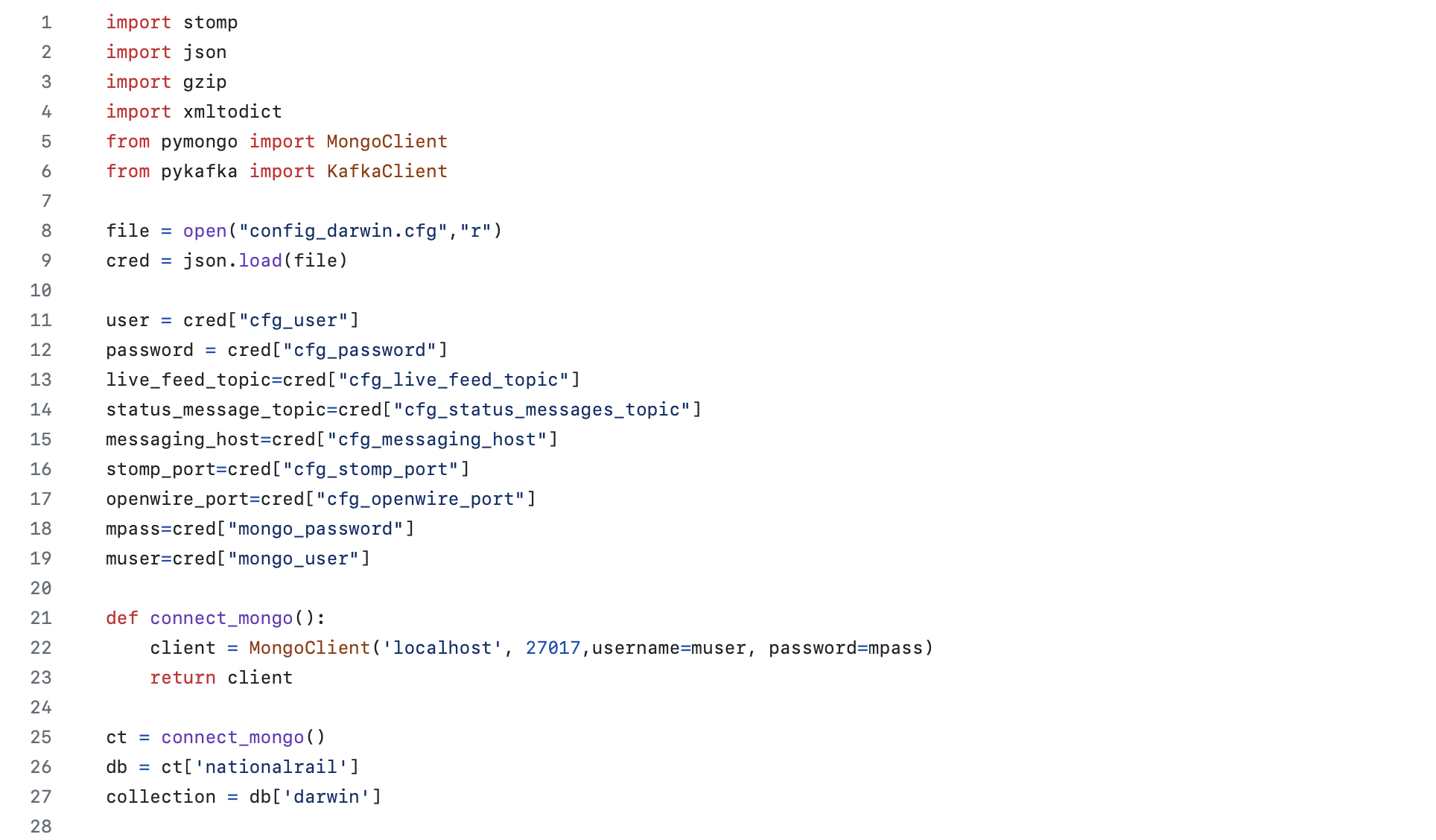
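If you prefer to do this step from Python rather than from the command line, pykafka can ask the broker to auto-create a topic simply by looking it up. The sketch below is an alternative to the command in the image, not a copy of it. It assumes the broker runs with `auto.create.topics.enable=true`; the helper names, the broker address, and the topic name you pass in are hypothetical.

```python
def ensure_topics(client, names):
    """Look up each topic so that pykafka asks the broker to auto-create it.

    `client` is expected to behave like pykafka.KafkaClient: indexing
    `client.topics` with a bytes name returns the topic. For a missing name
    pykafka requests auto-creation, and a KeyError is raised if the broker
    refuses (for example when auto.create.topics.enable is false).
    """
    ensured = []
    for name in names:
        # pykafka expects topic names as bytes.
        key = name.encode("utf-8") if isinstance(name, str) else name
        client.topics[key]  # the lookup itself triggers auto-creation
        ensured.append(key)
    return ensured


def connect_and_ensure(hosts, names):
    """Connect to the broker at `hosts` (e.g. "localhost:9092") and ensure topics."""
    # Imported lazily so ensure_topics() can be tried without pykafka installed.
    from pykafka import KafkaClient

    return ensure_topics(KafkaClient(hosts=hosts), names)
```

Calling `connect_and_ensure("localhost:9092", ["rail_feed"])` would then create a topic named `rail_feed` with the broker's default settings; for custom partition or replication settings, the Kafka command-line tools remain the better choice.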

Configuration file

Fill in the configuration file with the user and token provided by National Rail Open Data:

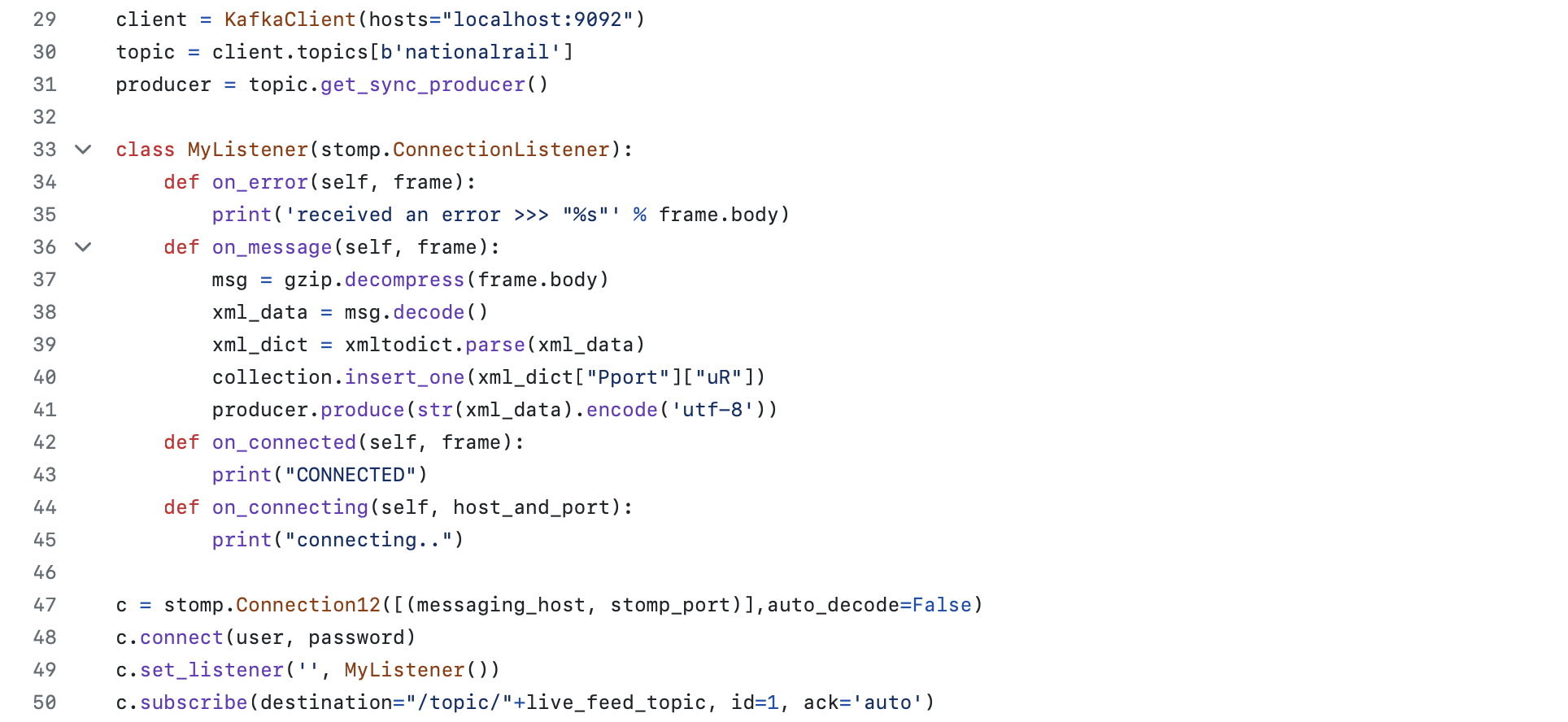
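As a rough illustration of how a script might read those credentials, here is a minimal sketch using Python's standard `configparser`. The INI layout, the `[nationalrail]` section name, and the `user` and `token` keys are assumptions made for this example; the actual file in the repo may be laid out differently.

```python
import configparser

# Hypothetical file contents; replace the values with your own credentials.
SAMPLE_CONFIG = """
[nationalrail]
user = someone@example.com
token = replace-me
"""


def load_credentials(text):
    """Return (user, token) from INI-formatted text."""
    parser = configparser.ConfigParser()
    parser.read_string(text)
    section = parser["nationalrail"]
    return section["user"], section["token"]


user, token = load_credentials(SAMPLE_CONFIG)
```

Keeping the credentials in a separate file like this makes it easier to leave them out of version control.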

Streaming it!

This is a simple script that reads from the queue and sends each message to MongoDB and a Kafka topic.
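To make the flow concrete, here is a minimal sketch of such a script, not the exact code from the repo. It assumes the messages arrive as plain XML text and that a duck-typed listener is enough for stomp-py. The class and function names, the database, collection, and topic names, and every argument to `run()` are hypothetical placeholders that you would replace with your own values.

```python
import json
import time


def parse_xml(body):
    """Turn an XML payload into a dict using xmltodict (imported lazily)."""
    import xmltodict

    return xmltodict.parse(body)


class Forwarder:
    """Sends every parsed message to a MongoDB collection and a Kafka producer."""

    def __init__(self, collection, producer, parse=parse_xml):
        self.collection = collection
        self.producer = producer
        self.parse = parse

    def handle(self, body):
        doc = self.parse(body)
        # Serialise first: pymongo's insert_one() adds an ObjectId under
        # "_id", which json.dumps cannot encode.
        payload = json.dumps(doc).encode("utf-8")
        self.collection.insert_one(doc)
        self.producer.produce(payload)
        return doc

    def on_message(self, *args):
        # Recent stomp-py releases call on_message(frame); older ones call
        # on_message(headers, body). Accept both shapes.
        body = args[0].body if len(args) == 1 else args[1]
        self.handle(body)


def run(stomp_host, stomp_port, user, token, queue, kafka_hosts, mongo_uri):
    """Wire the three services together; all arguments are placeholders."""
    import stomp
    from pykafka import KafkaClient
    from pymongo import MongoClient

    collection = MongoClient(mongo_uri)["rail"]["messages"]  # assumed names
    topic = KafkaClient(hosts=kafka_hosts).topics[b"rail_feed"]  # assumed name
    producer = topic.get_sync_producer()

    conn = stomp.Connection([(stomp_host, stomp_port)])
    conn.set_listener("forwarder", Forwarder(collection, producer))
    conn.connect(user, token, wait=True)
    conn.subscribe(destination=queue, id=1, ack="auto")

    while True:  # messages are handled on stomp-py's receiver thread
        time.sleep(1)
```

Because the collection, the producer, and the parser are passed in, `Forwarder` can be exercised with simple stand-ins before pointing it at the real feed. If the feed delivers compressed payloads, they would need to be decompressed before `parse_xml` is called.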

Testing - Result

More

- GitHub Repo here.